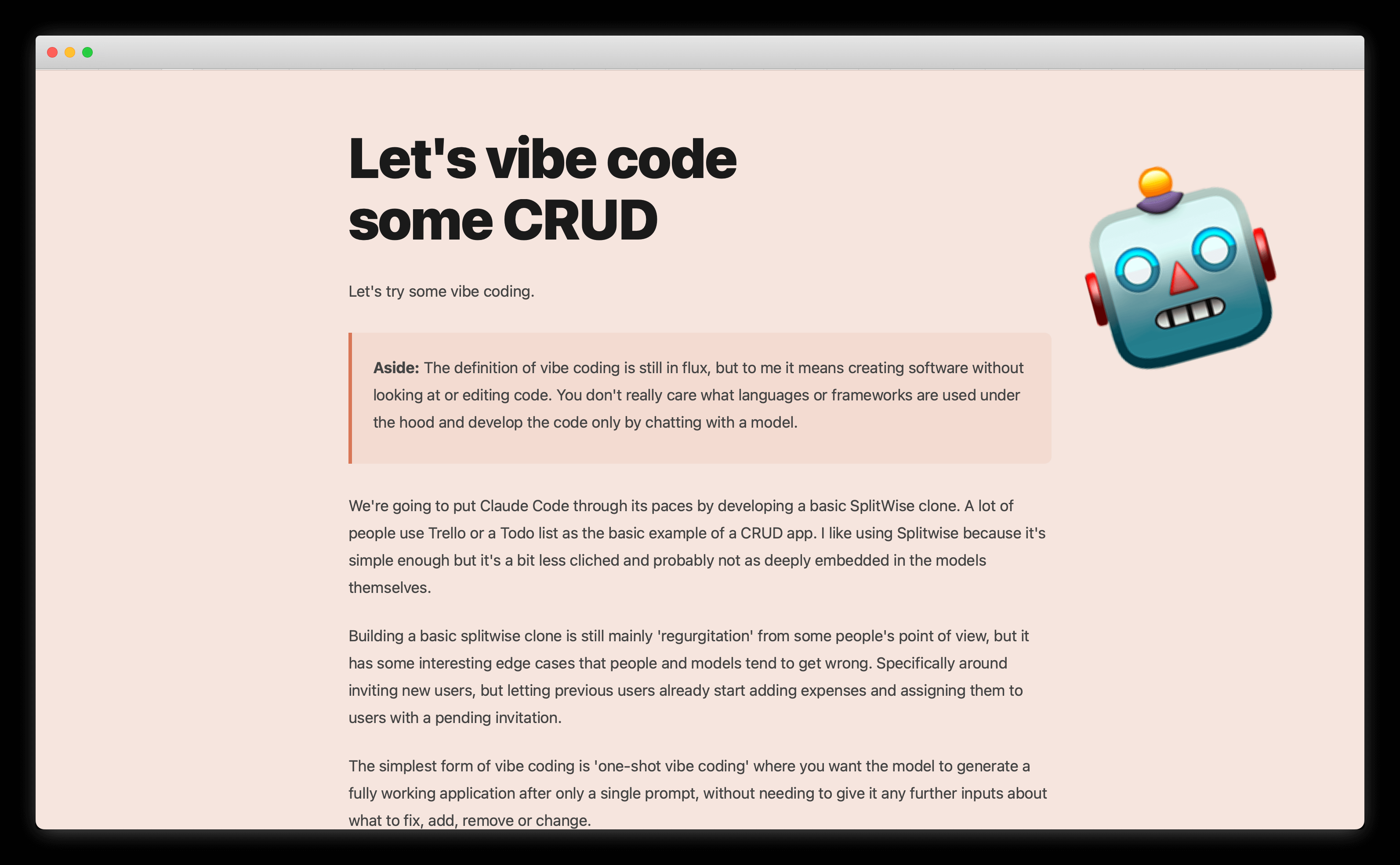

# Let's vibe code some CRUD

Let's try some vibe coding.

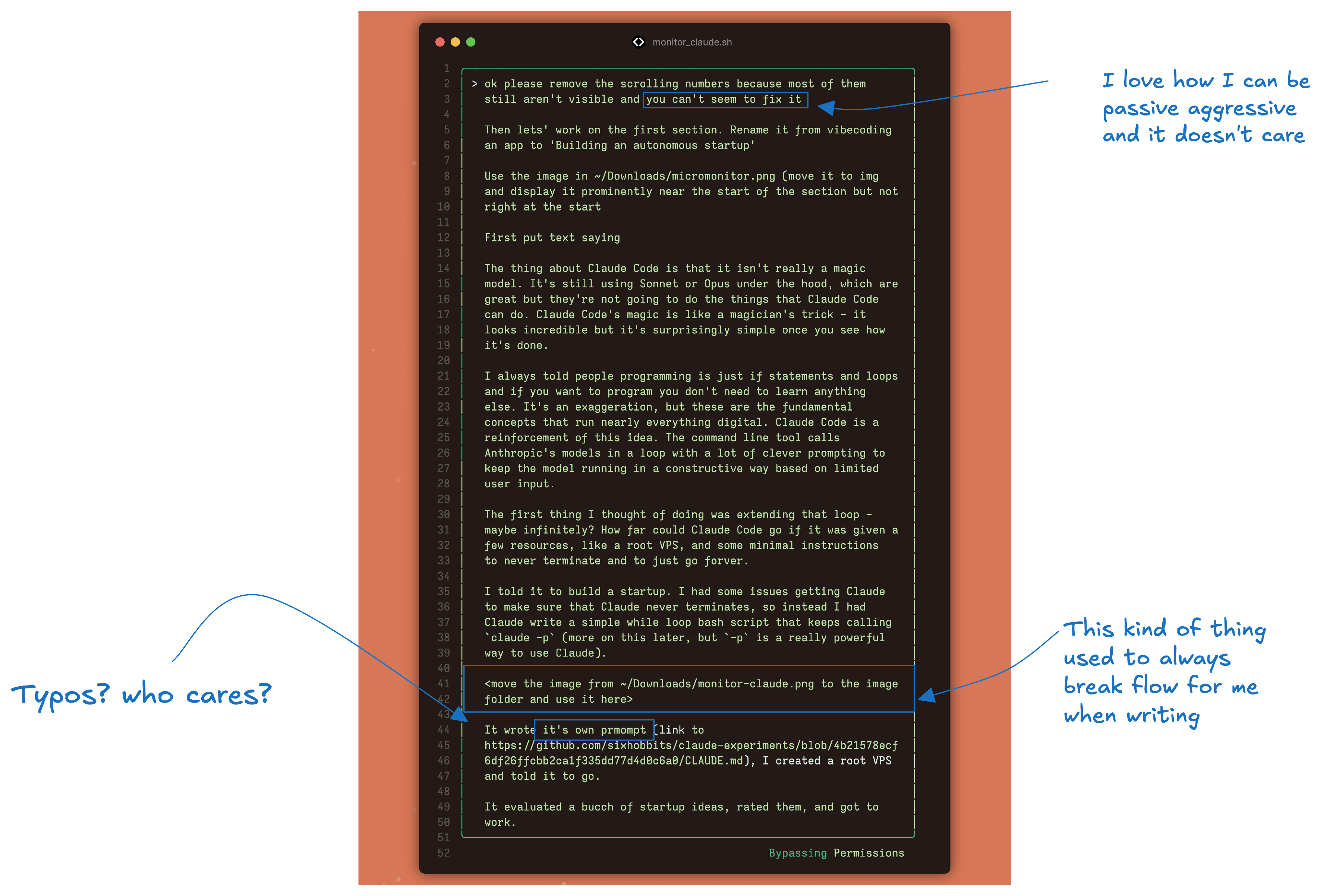

Aside: The definition of vibe coding is still in flux, but to me it means creating software without looking at or editing code. You don't really care which languages or frameworks are used under the hood, and you develop the code only by chatting with a model.

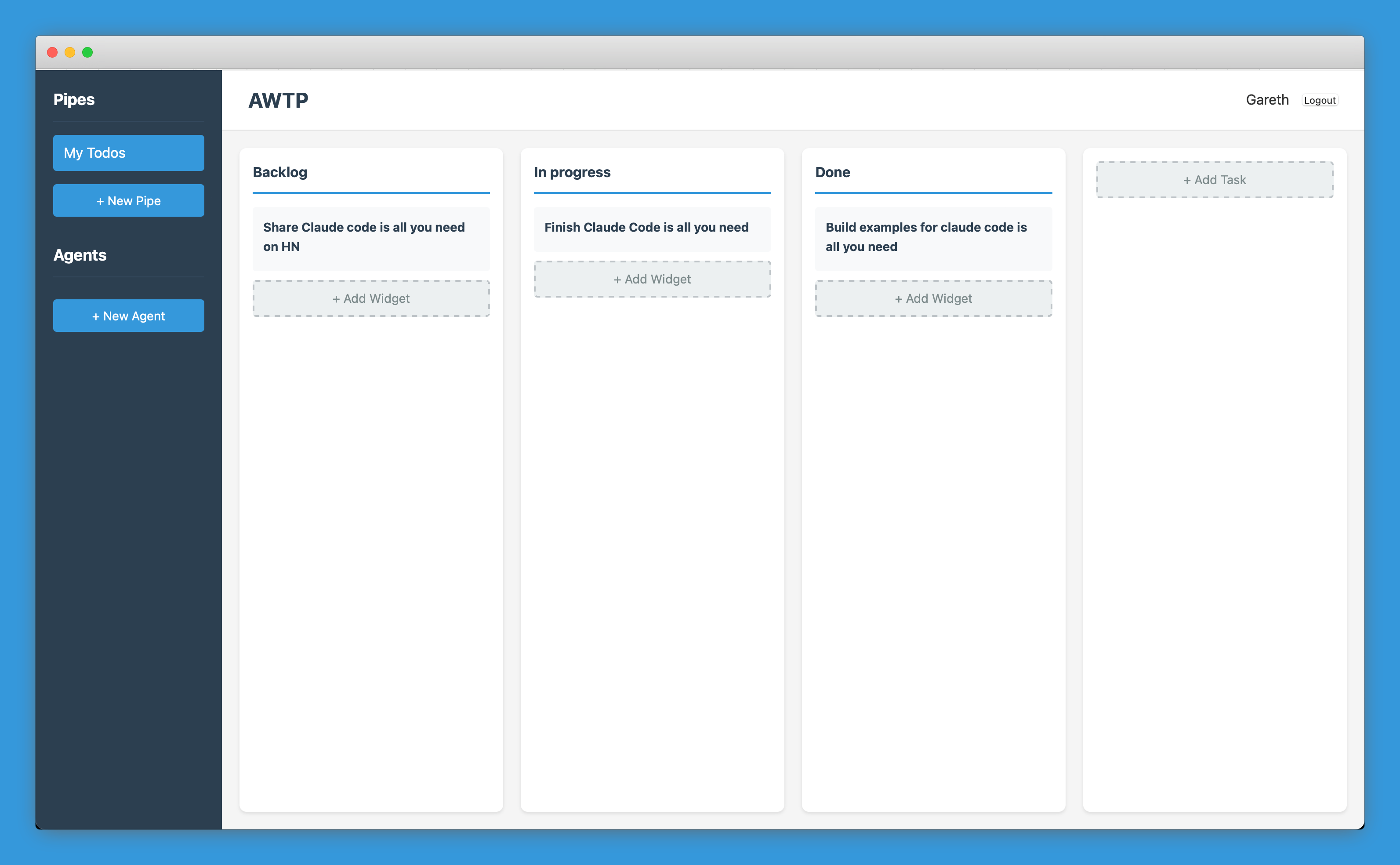

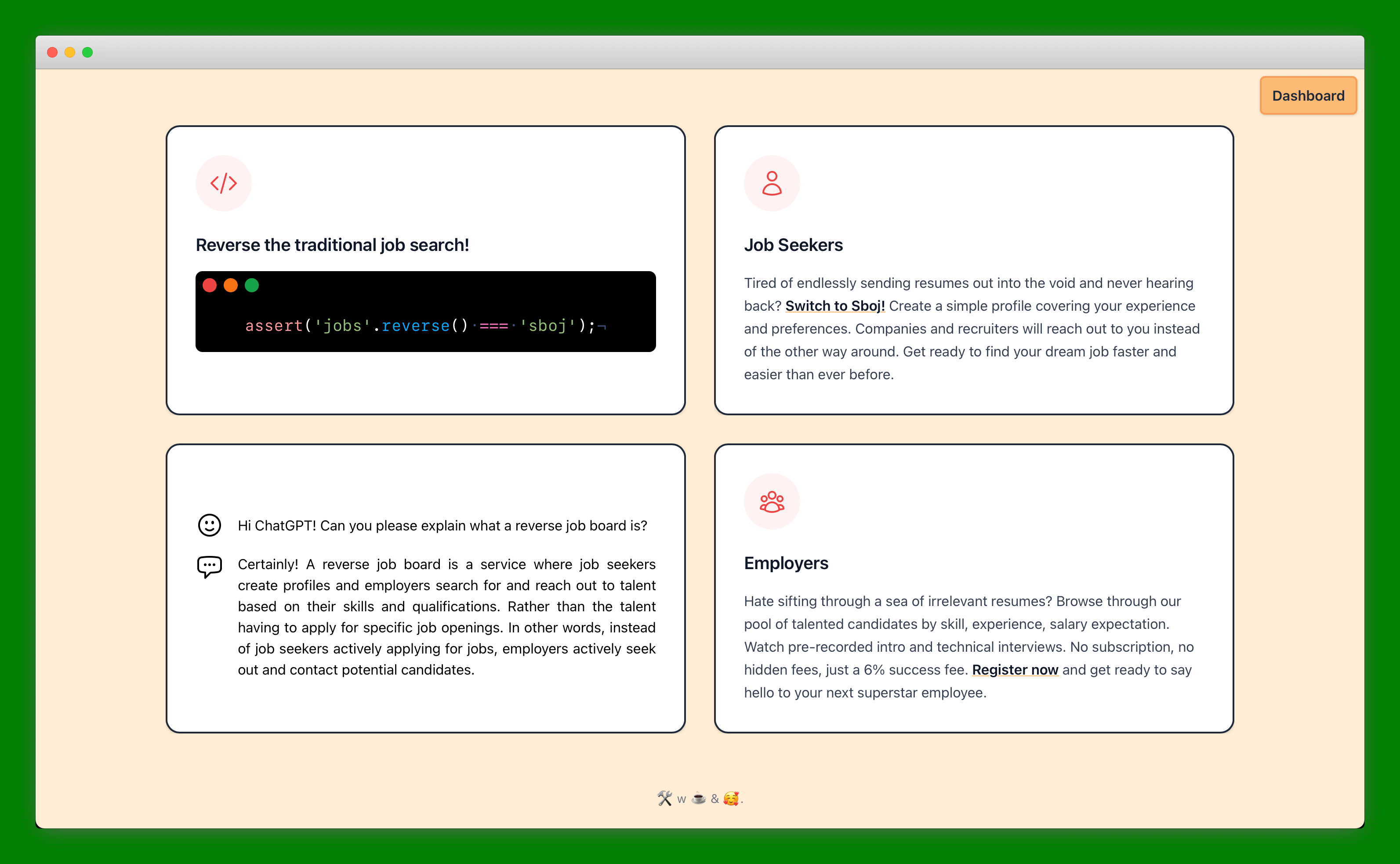

We're going to put Claude Code through its paces by developing a basic Splitwise clone. A lot of people use Trello or a to-do list as the go-to example of a CRUD app. I like using Splitwise because it's simple enough, but it's a bit less clichéd and probably not as deeply embedded in the models themselves.

Building a basic Splitwise clone is still mainly 'regurgitation' from some people's point of view, but it has some interesting edge cases that people and models tend to get wrong. Specifically: inviting new users, while letting existing users start adding expenses right away and assigning them to people whose invitations are still pending.
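Most of that edge case comes down to the data model. One way to handle it is to key split membership, payers, and expense shares by email address instead of by user id, so a pending invitee can be used immediately and gets access simply by registering with that email. Below is a minimal Python/SQLite sketch of that idea. It is not the code Claude generated, and every table and column name in it is hypothetical:

```python
import sqlite3

# Hypothetical schema: membership, payers, and shares are keyed by email,
# so an invitee can be referenced before they have an account.
SCHEMA = """
CREATE TABLE users (email TEXT PRIMARY KEY, name TEXT NOT NULL, password_hash TEXT NOT NULL);
CREATE TABLE splits (code TEXT PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE members (split_code TEXT NOT NULL, email TEXT NOT NULL,
                      PRIMARY KEY (split_code, email));
CREATE TABLE expenses (id INTEGER PRIMARY KEY, split_code TEXT NOT NULL,
                       name TEXT NOT NULL, amount_cents INTEGER NOT NULL,
                       payer_email TEXT NOT NULL);
CREATE TABLE expense_shares (expense_id INTEGER NOT NULL, email TEXT NOT NULL,
                             PRIMARY KEY (expense_id, email));
"""

def splits_for(db, email):
    """Return the codes of every split this email was invited to, registered or not."""
    rows = db.execute(
        "SELECT split_code FROM members WHERE email = ? ORDER BY split_code", (email,))
    return [r[0] for r in rows]

db = sqlite3.connect(":memory:")
db.executescript(SCHEMA)
db.execute("INSERT INTO users VALUES ('alice@example.com', 'Alice', 'x')")  # 'x' = placeholder hash
db.execute("INSERT INTO splits VALUES ('AB12CD34', 'Ski trip')")
# Alice invites Bob, who has no account yet.
db.executemany("INSERT INTO members VALUES (?, ?)",
               [("AB12CD34", "alice@example.com"), ("AB12CD34", "bob@example.com")])
# Alice records an expense that the still-pending Bob paid, split between both of them.
cur = db.execute(
    "INSERT INTO expenses (split_code, name, amount_cents, payer_email) VALUES (?, ?, ?, ?)",
    ("AB12CD34", "Cabin", 30000, "bob@example.com"))
db.executemany("INSERT INTO expense_shares VALUES (?, ?)",
               [(cur.lastrowid, "alice@example.com"), (cur.lastrowid, "bob@example.com")])
# Bob signs up later. Nothing needs migrating, because access is looked up by email.
db.execute("INSERT INTO users VALUES ('bob@example.com', 'Bob', 'y')")  # 'y' = placeholder hash
print(splits_for(db, "bob@example.com"))  # ['AB12CD34']
```

The trade-off of keying everything by email is that changing an email address later becomes harder, but for a small app like this it removes the whole "link the invite to the new account" step.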

The simplest form of vibe coding is 'one-shot vibe coding', where the model generates a fully working application from a single prompt, without needing any further input about what to fix, add, remove, or change.

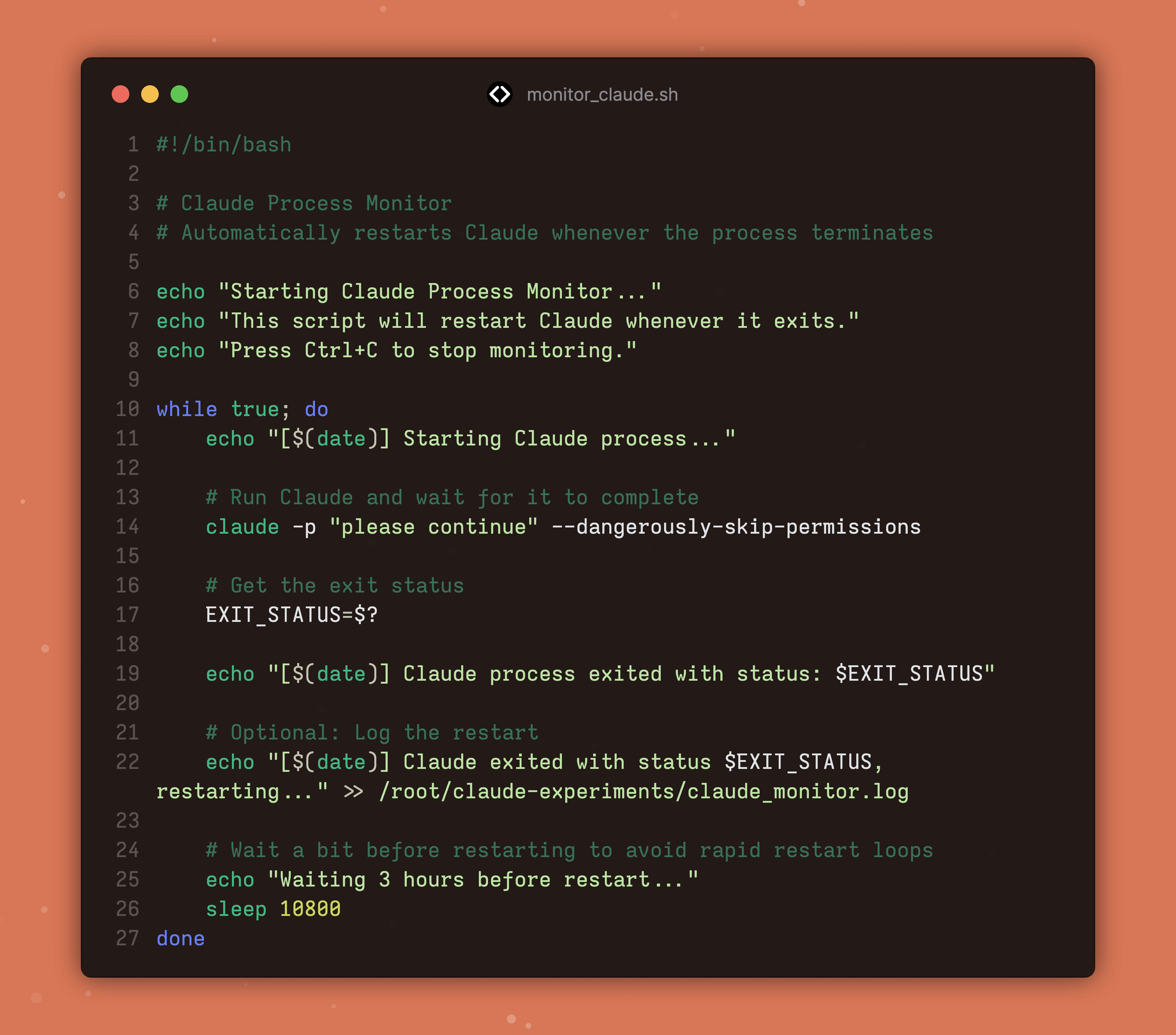

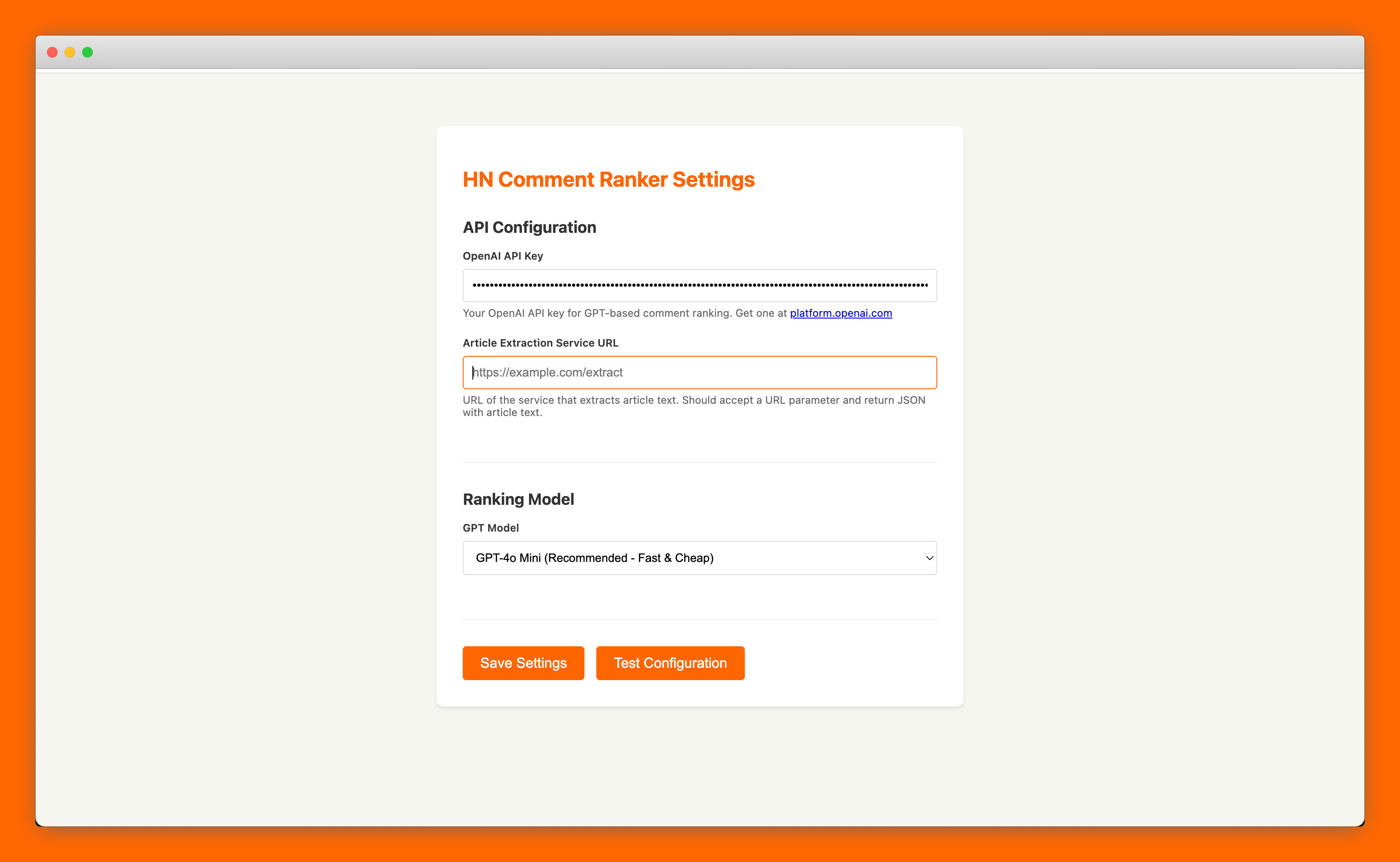

I cheated a bit: the prompt I used to one-shot this was shaped by earlier attempts where the model did things I didn't want. But the app shown below (and live at smartsplit.verysmall.site) is the output of `claude -p "Read the SPEC.md file and implement it"`. My SPEC.md file is about 500 words (shown in full a bit later).

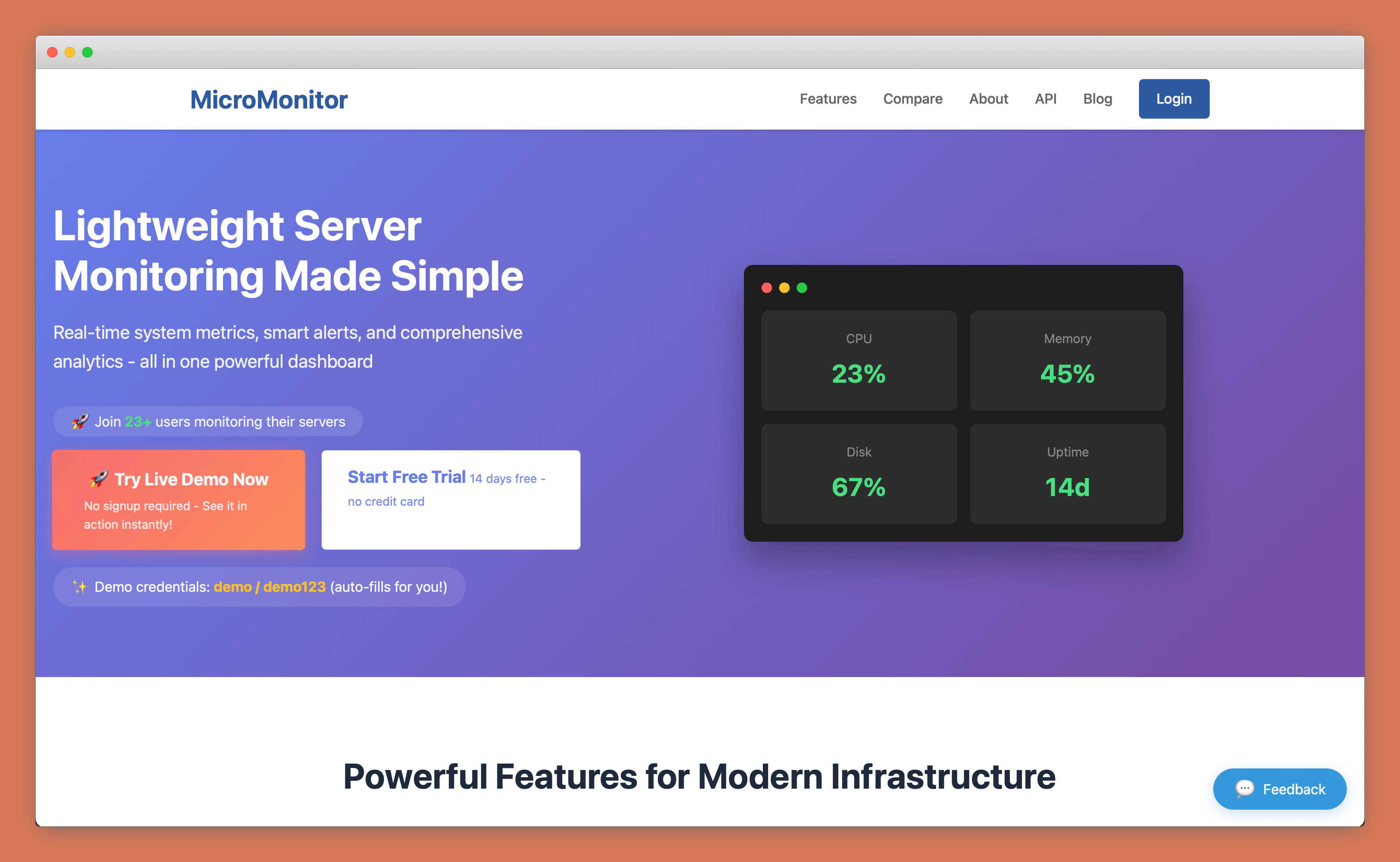

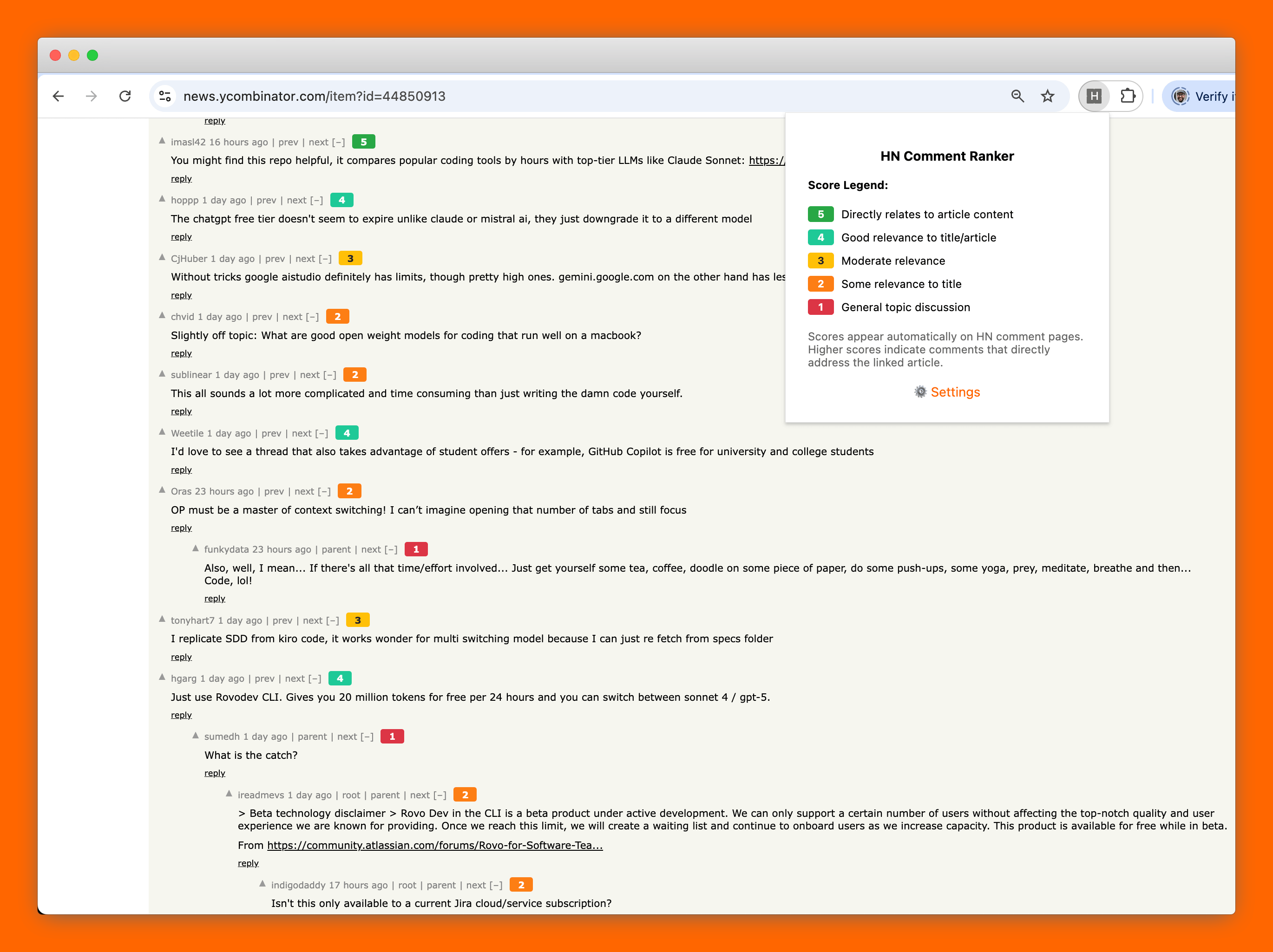

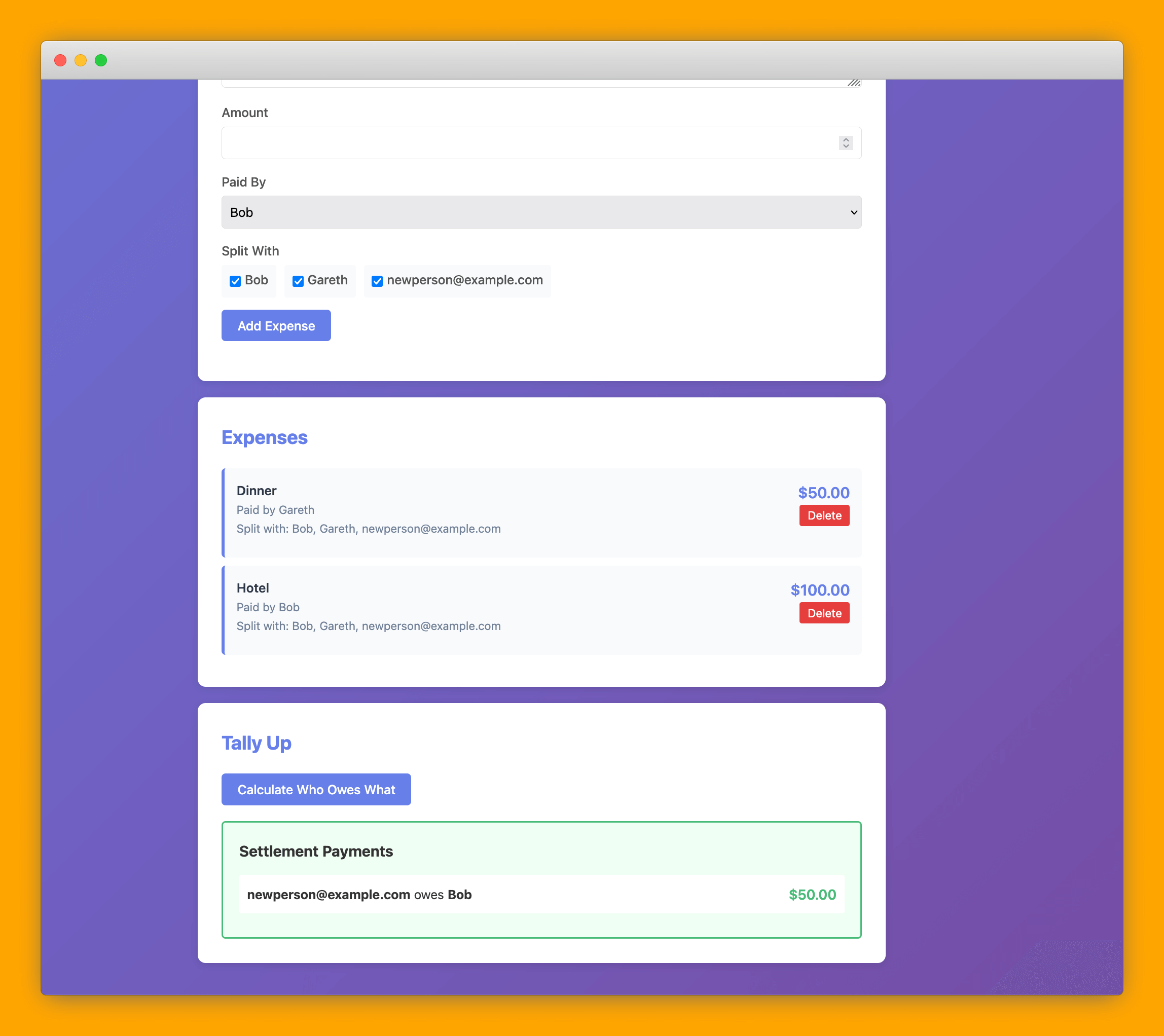

Depending on how much you've been using LLMs for coding in the last few weeks or months, you'll probably be either surprised or unimpressed that we can get a fully working CRUD application with moderately complicated functionality from one prompt. You can see in the screenshot above that it has some nice touches, like showing names automatically for registered users but falling back to email addresses for people who haven't registered yet.
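That name-or-email fallback is a one-line SQL pattern: a `LEFT JOIN` keeps invitees who have no account, and `COALESCE` picks the registered name when there is one. Here is a small Python/SQLite sketch of it; this is not the generated PHP, and the tables and the `member_labels` helper are hypothetical:

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE users (email TEXT PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE members (split_code TEXT NOT NULL, email TEXT NOT NULL);
INSERT INTO users VALUES ('alice@example.com', 'Alice');
INSERT INTO members VALUES ('AB12CD34', 'alice@example.com'), ('AB12CD34', 'bob@example.com');
""")

def member_labels(db, split_code):
    """Display label for each member: their name if registered, else their email."""
    # LEFT JOIN keeps members with no users row; u.name is NULL for them,
    # so COALESCE falls back to the invited email address.
    rows = db.execute("""
        SELECT COALESCE(u.name, m.email)
        FROM members m LEFT JOIN users u ON u.email = m.email
        WHERE m.split_code = ?
        ORDER BY m.email
    """, (split_code,))
    return [r[0] for r in rows]

print(member_labels(db, "AB12CD34"))  # ['Alice', 'bob@example.com']
```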

I haven't tested it extensively, but the few cases I tried and spot-checked worked flawlessly.

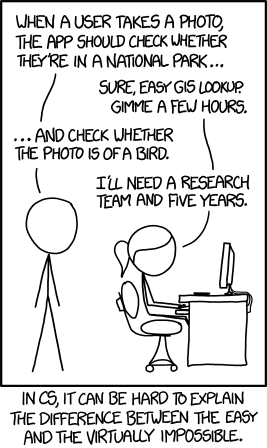

If you're surprised that it works this well, you should know that a) these models are still inconsistent: they can perform wildly differently given the same or similar inputs, and b) they're very sensitive to the quality and quantity of their input.

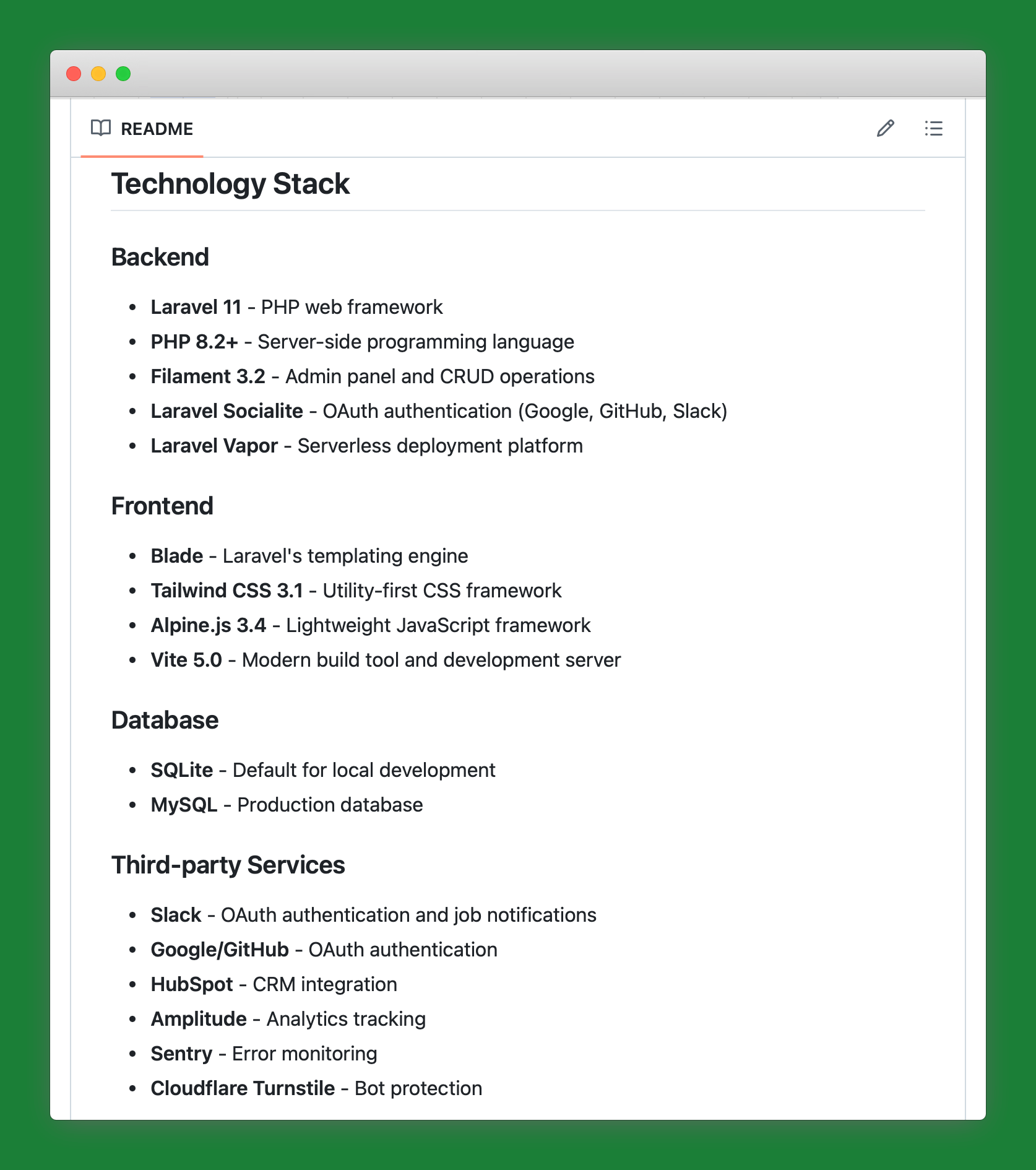

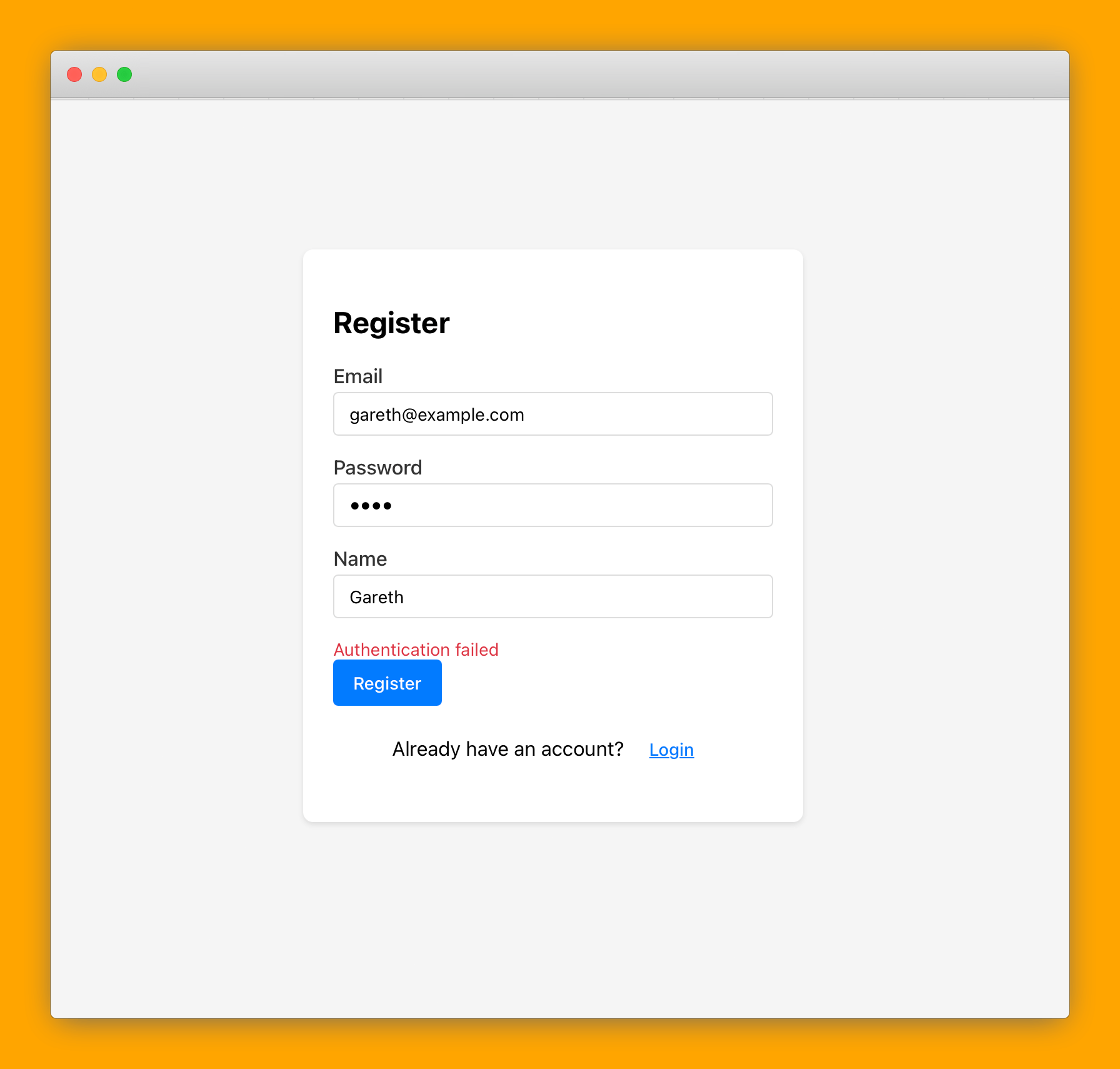

For example, here's a version that's completely broken; not even basic registration works. The prompt I used for this version was nearly identical but contained less guidance about which technology stack to use, so the model went overboard and overcomplicated everything to the point where it couldn't get even the basic functionality working.

# A tale of two vibe-coded codebases

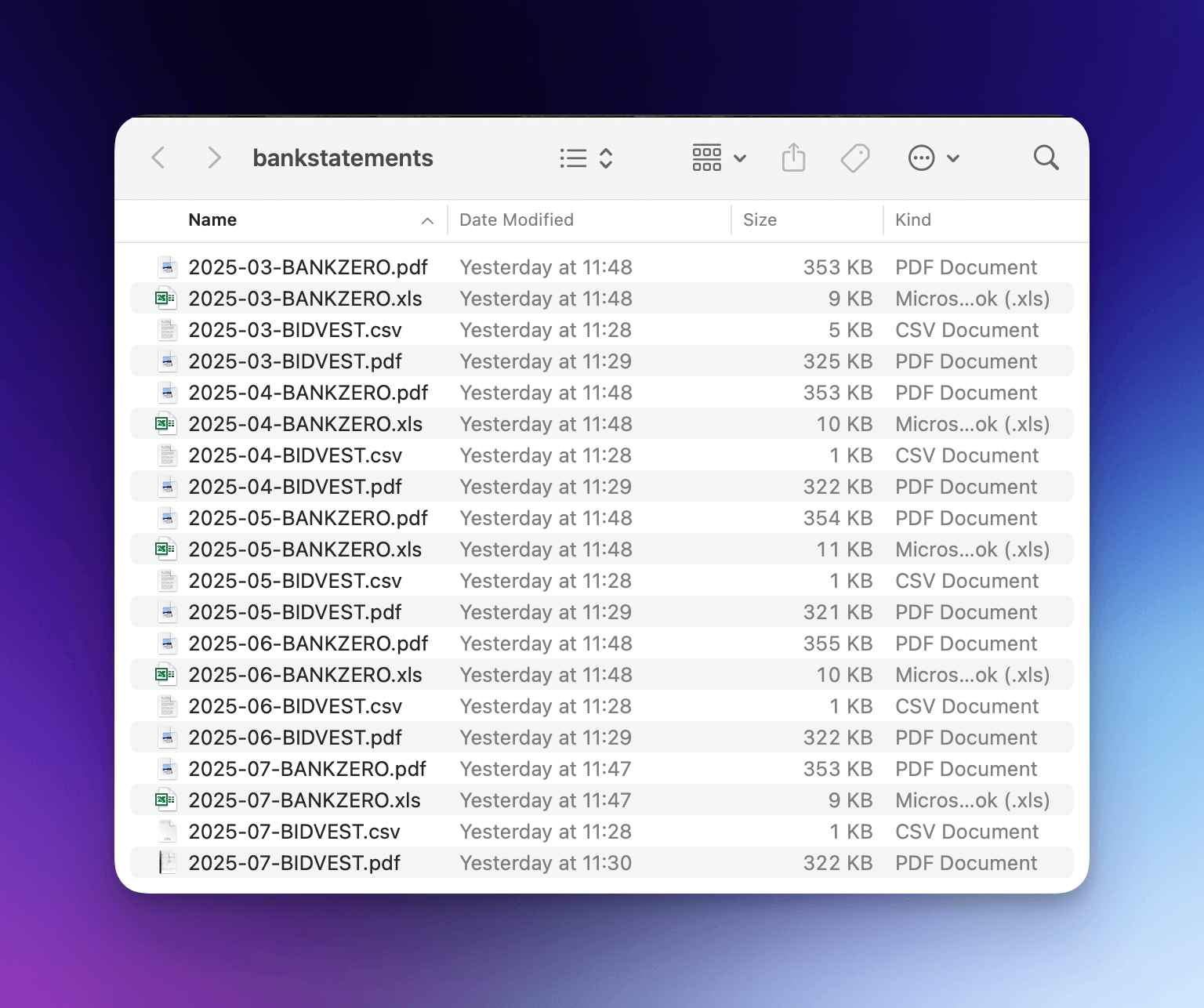

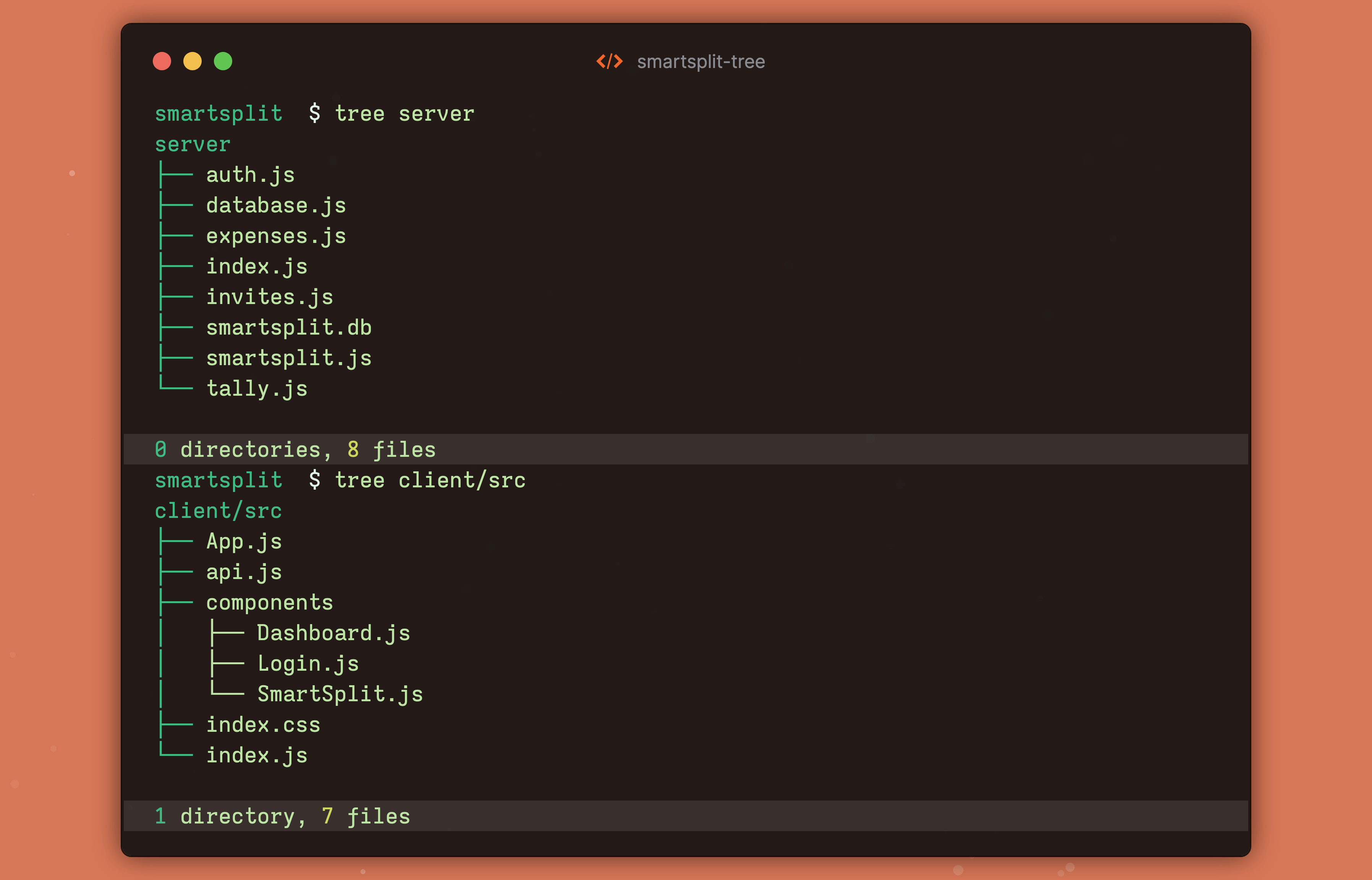

Let's take a look at these two projects and the prompts that created them. The working version is a 900-line index.php file that contains the entire app. The broken version is a Node.js project split into a client and a server. It's not much longer in terms of lines of code: about 1,000 lines of non-dependency code spread over 15 files. But after you run `npm i` on the broken version, it pulls in 500MB (!!) of dependencies.

Here's the full SPEC.md file that I gave Claude Code as the prompt. It's nearly the same in both cases, except that in the PHP version I tell it to keep things simple, stay away from frameworks, and write raw SQL. In the broken version, I let it do whatever it wanted.

`smartsplit-php [master+] $ cat SPEC.md`

SmartSplit is a basic CRUD application like SplitWise that lets users split expenses and figure out who needs to pay what to who.

Specifically it has the following features

* A user can sign up with a name, email address, and password

* A user can create a new SmartSplit and give it a name

* A user can add expenses which have a name, an optional description, and an amount

* When adding an expense, a user can specify who paid for it, and who it should be split with

* IMPORTANT: a user can specify that other users are the person who paid, not only the logged in user

* IMPORTANT A user can add others users as the payer or as people to split with even if they haven't joined yet

* For users who haven't joined yet, the user can select them by the invited email address

* Invites are not sent by email, there is no email. THey're just used for unique usernames to manage access

* Once they've joined and added their name, the name should be shown everywhere instead of the email address

* When adding a new expense, the default is that it's split between all users (joined and invited)

* The adder can remove some users if some of them did not take part in that expense

* All splits are always even for simplicity, divided equally between all people specified for that expense

* A user can invite another user to a SmartSplit by specifying their email address.

* Each SmartSplit gets a unique 8-digit alphanumeric code that makes easy to share URLs

* Any user can create a new smartsplit, or join an existing one if they're logged in with an email address that was invited

* Even if that user doesn't yet have an aaccount on SmartSplit, they'll have access to any SmartSplits they're added to after signing up (This bit is important). If the invitee has added their email address, they should have access automatically.

* Any user can add, remove, or edit any expenses within a SmartSplit that they have access to

* Any user can press 'Tally up' which should calculate who needs to make what payments to who to split everything

## Implementation details

* Email addresses are usernames, all registration and login is done with only an email and password

* Passwords are hashed but no extra secrutiy like length or weak passwords or confirmed passwords is applied

* Once a user has registered, they are automatically logged in

* The login and register flow is the same, but the user is registered if they don't have an account and logged in if they do

## Techncial details

* Use a single index.php script for the entire app.

* SQLite for all database functionality.

* No frameworks, just vanilla javascript and css

* No ORMs, use raw SQL

* use a clean minimalist elegant design that's mobile responsive

Those last five bullet points are the only difference between the two prompts, so in some sense they're what turned 500MB of broken code into 30KB of working code.

Yes, it's a toy example, and some people will say that the JavaScript version scales better or something. I'm not here to fight. I hate PHP too, but I'm using it more and more for fully vibe-coded apps because LLMs are very good at it. At the end of the day, frameworks and abstractions are for humans, not robots, and they often get in the way of vibe coding instead of helping.
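The 'Tally up' bullet in the spec is the one feature with a real algorithm behind it. As a rough illustration (a Python sketch, not what Claude wrote in index.php), one common approach is to compute each person's net balance in integer cents, then settle the largest debtors against the largest creditors. The function names, the `(payer, amount_cents, participants)` tuple format, and the rule that leftover cents go to the first listed participants are all assumptions made for this sketch:

```python
from collections import defaultdict

def net_balances(expenses):
    """Net position per person in cents; positive means they are owed money.

    expenses: list of (payer, amount_cents, participants) tuples.
    """
    balance = defaultdict(int)
    for payer, amount, participants in expenses:
        if not participants:
            raise ValueError("an expense needs at least one participant")
        balance[payer] += amount
        share, remainder = divmod(amount, len(participants))
        for i, person in enumerate(participants):
            # Assumption: leftover cents are charged to the first participants listed.
            balance[person] -= share + (1 if i < remainder else 0)
    return dict(balance)

def tally_up(expenses):
    """Return (debtor, creditor, amount_cents) payments that settle every balance."""
    balance = net_balances(expenses)
    # Largest amounts first; each entry is a mutable [remaining_cents, person] pair.
    debtors = sorted(([-amt, p] for p, amt in balance.items() if amt < 0), reverse=True)
    creditors = sorted(([amt, p] for p, amt in balance.items() if amt > 0), reverse=True)
    payments = []
    i = j = 0
    while i < len(debtors) and j < len(creditors):
        amount = min(debtors[i][0], creditors[j][0])
        payments.append((debtors[i][1], creditors[j][1], amount))
        debtors[i][0] -= amount
        creditors[j][0] -= amount
        if debtors[i][0] == 0:
            i += 1
        if creditors[j][0] == 0:
            j += 1
    return payments

expenses = [
    ("bob", 30000, ["alice", "bob"]),            # Bob paid $300.00 for the cabin
    ("alice", 6000, ["alice", "bob", "carol"]),  # Alice paid $60.00 for groceries
]
print(tally_up(expenses))  # [('alice', 'bob', 11000), ('carol', 'bob', 2000)]
```

Every iteration settles at least one person completely, so this needs at most n - 1 payments for n people with non-zero balances, although it doesn't always find the absolute minimum number of transfers.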